Neuroscientists at UC San Francisco have created an artificial intelligence (AI)-powered brain implant system that decodes brain activity and uses it to generate speech through a virtual vocal tract. The study involved participants with intact speech, but the approach could potentially restore vocal function in patients who have lost the ability to speak because of neurological damage. The findings were published in Nature on April 24, 2019.

The system was developed in the lab of Edward Chang, MD, professor of neurological surgery at UCSF and a member of the UCSF Weill Institute for Neuroscience. The research shows that it may be possible to create a synthesized version of a person's voice that is controlled by the activity of the brain's speech centers. The authors suggest this approach could not only give people with speech disabilities a means of communication but also restore some of the tonal qualities of the voice that convey emotion and personality.

“For the first time, this study demonstrates that we can generate entire spoken sentences based on an individual’s brain activity,” said Chang. “This is an exhilarating proof of principle that with technology that is already within reach, we should be able to build a device that is clinically viable in patients with speech loss.”

Several conditions commonly cause speech-impairing damage to the nervous system, including brain injury, stroke, Parkinson's disease, ALS, and multiple sclerosis. Currently, these patients can communicate only by spelling out words one letter at a time with devices that track facial or eye movements. This method is tedious and error-prone, producing at most 10 words per minute.

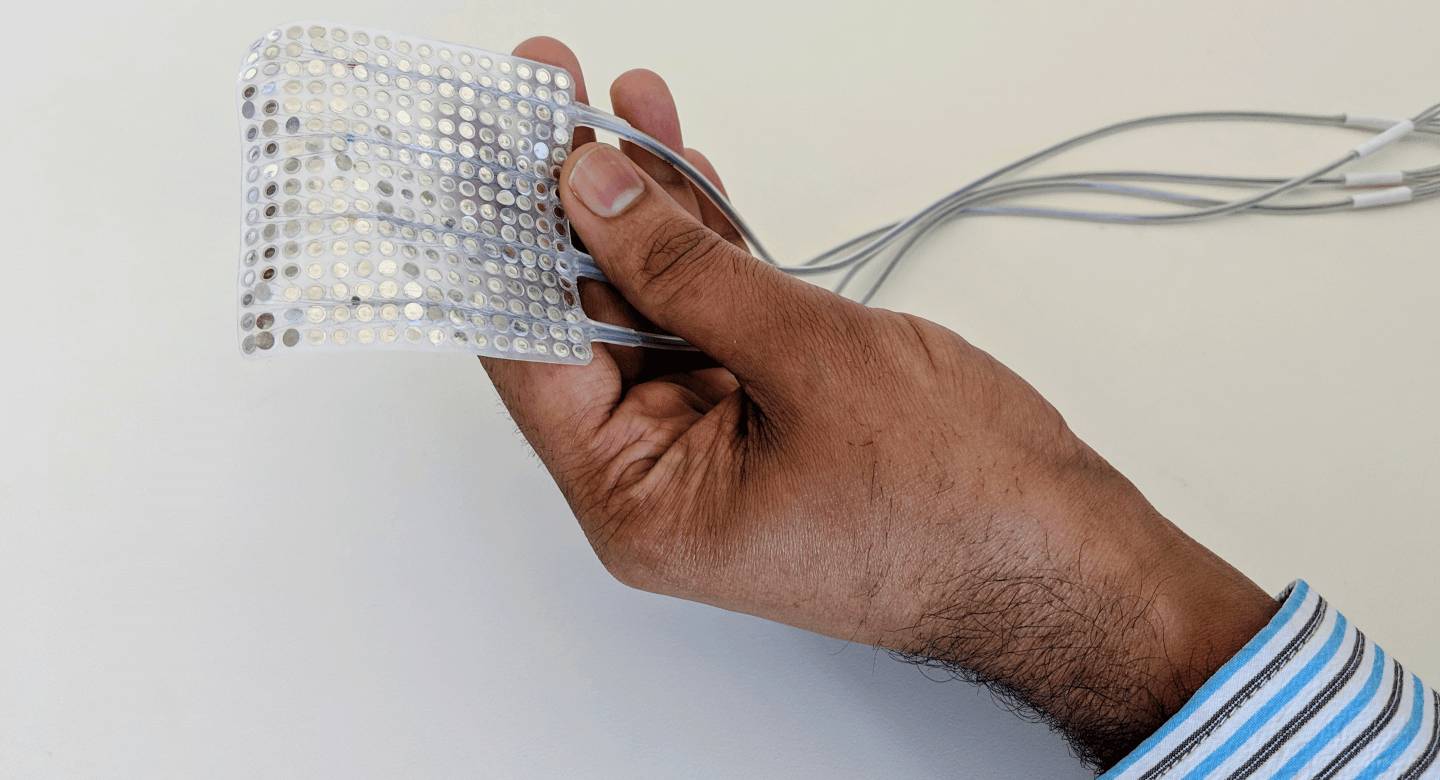

The research was led by speech scientist Gopala Anumanchipalli, PhD, and Josh Chartier, a bioengineering graduate student in the Chang lab. The study involved five participants who were undergoing intracranial monitoring, a procedure in which electrodes placed on the brain record its activity as part of epilepsy treatment. Using high-density electrocorticography, the researchers tracked activity in brain areas involved in controlling speech and mouth movements as the volunteers read several hundred sentences aloud.

“The relationship between the movements of the vocal tract and the speech sounds that are produced is a complicated one,” Anumanchipalli said. “We reasoned that if these speech centers in the brain are encoding movements rather than sounds, we should try to do the same in decoding those signals.”

Instead of translating brain signals directly into audio, the team used a two-stage decoding technique. First, neural activity was transformed into movements of the vocal-tract articulators, such as the lips, tongue, and jaw; then the decoded movements were turned into spoken sentences. Each transformation used recurrent neural networks, a type of AI that is well suited to analyzing and transforming sequential data with complex structure.
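To make the two-stage idea more concrete, the Python sketch below chains two small recurrent networks: the first maps a sequence of neural feature vectors to articulator trajectories, and the second maps those trajectories to acoustic features. This is an illustration under stated assumptions, not the UCSF team's model. The networks are untrained simple (Elman) RNNs with random weights, and the names (`ElmanRNN`, `decode`) and dimensions (16 neural channels, 6 articulator channels, 8 acoustic features) are hypothetical. A real system would learn its weights from recorded data and feed the acoustic output to a speech synthesizer.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights; a trained model would learn these from recordings."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class ElmanRNN:
    """Simple recurrent net: h_t = tanh(W_x x_t + W_h h_{t-1}), y_t = W_y h_t."""

    def __init__(self, n_in: int, n_hidden: int, n_out: int, seed: int) -> None:
        rng = random.Random(seed)
        self.w_x = random_matrix(n_hidden, n_in, rng)
        self.w_h = random_matrix(n_hidden, n_hidden, rng)
        self.w_y = random_matrix(n_out, n_hidden, rng)
        self.n_hidden = n_hidden

    def run(self, sequence: List[Vector]) -> List[Vector]:
        """Process a time series step by step, carrying the hidden state forward."""
        h = [0.0] * self.n_hidden
        outputs = []
        for x in sequence:
            pre = [a + b for a, b in zip(matvec(self.w_x, x), matvec(self.w_h, h))]
            h = [math.tanh(p) for p in pre]
            outputs.append(matvec(self.w_y, h))
        return outputs


# Hypothetical sizes, chosen only for illustration.
N_ELECTRODES = 16    # neural features per time step
N_ARTICULATORS = 6   # articulator trajectories (e.g. lips, tongue, jaw)
N_ACOUSTIC = 8       # acoustic features a synthesizer could turn into audio

stage1 = ElmanRNN(N_ELECTRODES, 32, N_ARTICULATORS, seed=1)  # brain -> movements
stage2 = ElmanRNN(N_ARTICULATORS, 32, N_ACOUSTIC, seed=2)    # movements -> sound


def decode(neural: List[Vector]) -> List[Vector]:
    """Two-stage decoding: neural activity -> articulator movements -> acoustics."""
    kinematics = stage1.run(neural)
    return stage2.run(kinematics)


if __name__ == "__main__":
    rng = random.Random(0)
    fake_recording = [[rng.gauss(0, 1) for _ in range(N_ELECTRODES)] for _ in range(50)]
    acoustics = decode(fake_recording)
    print(len(acoustics), len(acoustics[0]))  # 50 time steps, 8 acoustic features each
```

Splitting the problem this way gives each network a simpler mapping to learn, in line with Anumanchipalli's reasoning that the brain's speech centers encode movements rather than sounds.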


The results provide strong evidence that a brain-computer interface can generate speech, both in how accurately it reconstructs the sounds of speech and in how well listeners can understand the synthesized sentences. The researchers hope this technology may one day allow people who have lost their voice to speak again.

“People who can’t move their arms and legs have learned to control robotic limbs with their brains,” Chartier said. “We are hopeful that one day people with speech disabilities will be able to learn to speak again using this brain-controlled artificial vocal tract.”

“I’m proud that we’ve been able to bring together expertise from neuroscience, linguistics, and machine learning as part of this major milestone towards helping neurologically disabled patients,” concluded Anumanchipalli.

> From #brain –> speech. Remarkable use of #Ai recurrent neural nets to transform brain activity to intelligible speech https://t.co/Ox6ch8hK6I @Nature by @NeurosurgUCSF‘s @GopalaSpeech @joshchartier Edward Chung @UCSF @UCSFMedicine major implications for patients with paralysis pic.twitter.com/JOcbOfmpjU
>
> Eric Topol (@EricTopol), April 24, 2019

© 2025 Mashup Media, LLC, a Formedics Property. All Rights Reserved.